Government agencies know what they are up against. They know that malicious actors are leveraging artificial intelligence to launch a higher volume of attacks and to make attacks more targeted and harder to detect. And they know the threats will only get worse. Given the constraints on their staffing and budgets, they also know AI is essential to strengthening their cyber posture.

The good news is that cyber experts across the public and private sectors are working furiously to help agencies keep pace in the AI cyber arms race. Here are some ways AI is helping the effort.

Four Stress Points When Building AI-Driven Cyber Defenses

Although AI can go a long way toward strengthening cyber operations, it comes at a price. Recent research highlights four challenges that often catch organizations off guard:

Increased Demands on Network/Hardware Infrastructure. As any data center operator would tell you, AI-based solutions require more processing power and memory, and the bigger the model, the greater the implementation costs.

Increased Concerns About Physical Security. Not every malicious actor will attack a data center through the internet. Some just break in through a back door. The risk is not just to the core hardware and software, but also to cooling systems, power backups and utility connections.

More Complex Data Management. Typical AI models require massive datasets, and that data must be high-quality, complete and error-free. Data quality has always been important, but now the stakes are higher because unreliable data translates into unreliable AI outputs.

Exacerbated Staffing Challenges. An AI-based cybersecurity initiative is inherently a multidisciplinary affair, involving project managers and people with expertise in cybersecurity, AI and data. For organizations already struggling to hire and retain technical experts, this can feel overwhelming. But there is an upside: Once in place, AI-based automation can ease the burden on security teams.

New Hope for Operational Technology?

Operational technology, such as industrial systems and controllers, utilities and sensors, is a major vulnerability across government and the critical infrastructure sector. These systems are increasingly connected to the internet but often lack robust security measures. AI could help fill that gap, according to the SANS Institute.

Potential uses include:

- Enhancing threat detection

- Guiding and accelerating response efforts

- Improving vulnerability management

- Helping engineers adapt to evolving cyber threats

Revisiting the Weakest Links (i.e., Us)

The market for AI-based security solutions is still taking shape, and organizations are exploring how AI might or might not help. But of all its potential benefits, perhaps the most tantalizing is this: reducing the damage resulting from simple human error. “Whether it is a decision-based mistake, a skill-related oversight, or an error in task execution, intentional or not, eliminating the potential for human error is the initial step in establishing a well-protected cyber environment,” researchers say.

A New Cyber Concern: The Security of AI Itself

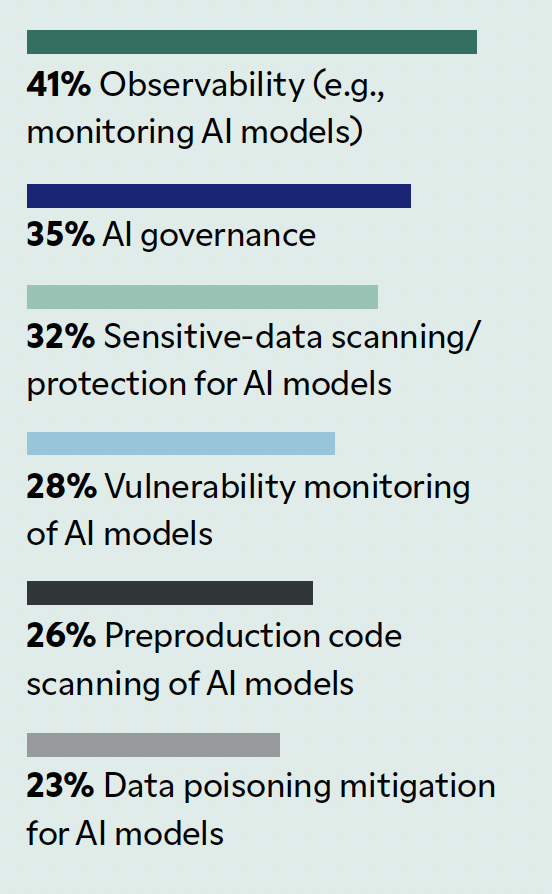

As AI becomes integral to so many aspects of an agency’s operations, the security of those AI models becomes a mission-critical concern. Agencies need to monitor and protect their AI operations from start (gathering data and training models) to finish (ensuring model integrity). Here are the top concerns about cyber-related AI risks, according to a recent survey by McKinsey and Co.:

This article appeared in our guide, “The AI Cyber Arms Race Is On.” For more on how artificial intelligence will shape cybersecurity strategies in 2026 and beyond, download the guide here:
