In 2023 so far, at least 27 states and territories have pursued more than 80 bills related to AI, including legislation to study, restrict or prohibit its use.

A new Connecticut law, in particular, zeroes in on state agencies' reliance on AI. Beginning on Dec. 31, 2023, the state’s Department of Administrative Services will conduct an annual inventory of all AI-based systems used by state entities, and each year the department must confirm that none of those systems leads to unfair discrimination or disparate impacts.

The Connecticut Office of Policy and Management will create policies and procedures governing such AI assessments and clarifying how agencies must develop, procure and implement their AI systems.

The law, which passed with overwhelming bipartisan support, also establishes a working group charged with adopting a Connecticut-specific AI bill of rights based on the White House's Blueprint for an AI Bill of Rights. Among other objectives, the working group will make recommendations for additional AI legislation.

Not all risk mitigation is AI-specific, though: Officials also use broader legislation to protect against threats. For instance, state and federal data privacy laws limit AI’s use of personal information.

“Too often,” says the White House’s blueprint, “[AI] tools are used to limit our opportunities and prevent our access to critical resources or services. … These outcomes are deeply harmful — but they are not inevitable.”

Areas of Concern

- Privacy

- Transparency

- Discrimination

- Ethics

- Job loss

- Security

- Accuracy

- Governance

- Data repurposing

- Accountability

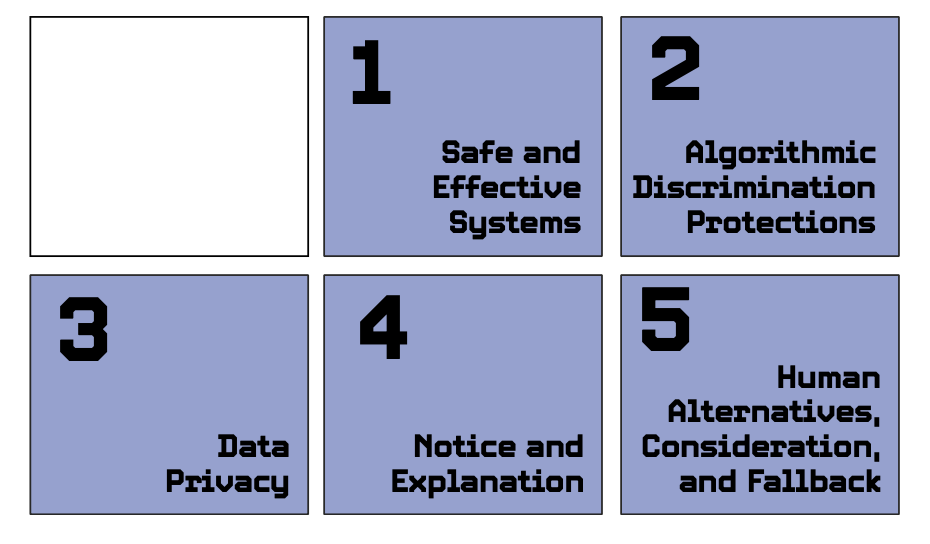

AI Bill of Rights Blueprint

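The White House Blueprint for an AI Bill of Rights identifies five principles that should “guide the design, use, and deployment of automated systems … in the age of artificial intelligence.” The principles are safe and effective systems; algorithmic discrimination protections; data privacy; notice and explanation; and human alternatives, consideration, and fallback.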

New York Takes on Bias in Hiring AI

New York City is being recognized as a “modest pioneer in AI regulation” thanks to a city law that took effect in July 2023.

The law focuses on one application of AI technology — the hiring process — and requires companies that use AI-based hiring software to notify applicants that an AI system is involved and to tell candidates, if they ask, what data was used to make a decision. Companies must have their software independently audited each year to check for bias.

The law defines an “automated employment decision tool” as technology designed “to substantially assist or replace discretionary decision making,” but the rules that implement the law narrowly interpret that definition.

The result is that audits are required only for AI software that’s the sole or primary factor in a hiring decision, or that overrules a human decision. (In most hiring situations, though, a manager is the final arbiter.)

One feature that could become a nationwide trend: Rather than try to explain the mysteries of AI algorithms, the law evaluates bias based on AI impacts — that is, how it affects a protected group of job applicants.
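To make the impact-based approach concrete, here is a minimal sketch of one common way to measure it: compare each group's selection rate with the rate of the most-selected group. The article does not say which metric auditors use, so the ratio below is an assumption for illustration, and the function name, group labels and applicant counts are all hypothetical.

```python
from collections import Counter


def impact_ratios(outcomes):
    """Return each group's selection rate divided by the highest group rate.

    `outcomes` is an iterable of (group, was_selected) pairs.
    This metric is an illustrative assumption, not a quotation of any law.
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1

    # Selection rate: share of each group's applicants who advanced.
    rates = {group: selected[group] / totals[group] for group in totals}

    best = max(rates.values())
    if best == 0:
        # Nobody was selected, so no group is favored over another.
        return {group: 0.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical audit data: 100 applicants per group.
applicants = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 20 + [("group_b", False)] * 80
)

ratios = impact_ratios(applicants)
for group, ratio in sorted(ratios.items()):
    print(f"{group}: impact ratio {ratio:.2f}")
```

In this made-up data, the tool advances group_b applicants half as often as group_a applicants. A gap like that is visible from outcomes alone, with no need to inspect how the algorithm reached its decisions.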

This article appears in our new guide, “AI: A Crash Course.”
