[Also posted here]

I spent a good deal of time gathering and sifting through the lists of 2011 semifinalists and finalists, cleaning up school names and gathering latitude and longitude information for each of the schools I saw represented in the data sets. At present, I have not had a chance to do the same for the nominees list, because it is so much larger than the other two sets of data. Once I do, I will showcase what I find, hopefully presenting it in a nice interactive tool so that you can see the sheer drop-off in numbers, especially as a function of geographic distribution.

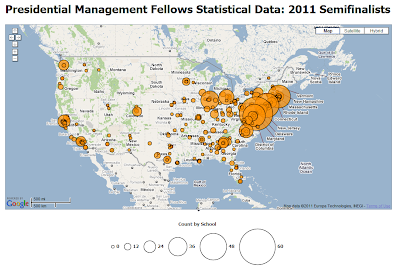

In the meantime, here are two graphics extracted from my current visualization efforts, which seek to present this year’s PMF program in terms of its geographic distribution. The view is of course centered on the US, not only because there are far fewer applicants from non-US schools, but also because I had to have a starting point for my representation. I will adjust my visualization settings later to indicate the scale of the program’s global distribution, which in some ways outperforms the program’s reach within a certain class of US schools (I mean in this case HBCUs, or historically black colleges and universities, whose representation in the PMF program has been marginal in the past). All this is to say that more visualizations are forthcoming just as soon as I can find meaningful ways to express them.

Now let’s get on to some graphics. You will want to open these up to see them full size.

In this first image you can see the geographic distribution of the 2011 semifinalists. The markers are sized according to the number of semifinalists from each of the nominating schools (though see below for some additional detail on my cleanup approach). The legend below indicates the relative sizes, and I should point out that the largest circle is for schools that had 60 or more semifinalists. In all, there were approximately 280 schools represented among the 1530 semifinalists. You will no doubt notice the heavy presence of East Coast schools, especially centered around DC, which should be no surprise; what may be surprising is the volume of semifinalists at Upper Midwest and West Coast schools.
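For anyone who wants to try this kind of count-based sizing, here is a minimal sketch in Python. It is not the code behind these maps: the function name `marker_radius`, the 30-pixel maximum, and the square-root scaling (so that circle area, rather than radius, tracks the count) are all assumptions made for illustration. The only detail taken from the description above is the cap at 60 semifinalists.

```python
import math

# From the post: the largest circle is used for schools with 60 or more semifinalists.
CAP = 60


def marker_radius(count, max_radius=30.0, cap=CAP):
    """Return a circle radius whose area is proportional to `count`, capped at `cap`.

    `max_radius` (in pixels) is an illustrative assumption, not a value from the post.
    """
    if count <= 0:
        return 0.0
    capped = min(count, cap)
    # Square-root scaling keeps the circle's area, not its radius, proportional to the count.
    return max_radius * math.sqrt(capped / cap)


if __name__ == "__main__":
    for n in (1, 15, 60, 120):
        print(n, round(marker_radius(n), 1))
```

With these assumed settings, a school with 120 semifinalists gets the same circle as one with 60, which matches the "60 or more" top bucket in the legend.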

In this second image, which depicts the schools with finalists, you can see a very noticeable decline in the size of the markers compared with the semifinalist map, and a less noticeable drop in the scope of geographic distribution. Gone is the apparent advantage exhibited in the previous graphic by both the West Coast and Upper Midwest schools. It is quite obvious that East Coast schools are massively overrepresented in this program (and someone has already done a breakdown of degree programs, so we know what that picture looks like). Since there were many schools with single-digit nominees, it is also expected that there would be fewer schools represented in the finalists data. From 280 in the semifinalist round, we drop to 210 schools among 858 finalists. That is, approximately 25% of the schools that were represented in the semifinalists data ultimately failed to put forward finalists this year. This is a testament to both the competitiveness of the program and the long road it still has ahead of it to market itself to every eligible graduate school.
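The percentages here are simple to check. The short Python sketch below uses only the four totals quoted in this post (280 and 210 schools, 1530 semifinalists and 858 finalists); the helper name `pct_drop` is just an illustrative choice.

```python
# Totals quoted in the post (the 280 figure is approximate).
SEMIFINALIST_SCHOOLS = 280
FINALIST_SCHOOLS = 210
SEMIFINALISTS = 1530
FINALISTS = 858


def pct_drop(before, after):
    """Percentage decrease going from `before` to `after`."""
    return 100.0 * (before - after) / before


school_drop = pct_drop(SEMIFINALIST_SCHOOLS, FINALIST_SCHOOLS)
people_drop = pct_drop(SEMIFINALISTS, FINALISTS)
advance_rate = 100.0 * FINALISTS / SEMIFINALISTS

print(f"Schools no longer represented: {school_drop:.1f}%")
print(f"Semifinalists who did not advance: {people_drop:.1f}%")
print(f"Semifinalists who became finalists: {advance_rate:.1f}%")
```

This gives a 25.0% drop in schools, and shows that about 56.1% of semifinalists went on to become finalists.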

Finally, let me talk a bit about the data. The biggest challenge in an operation like this is that with so many data points to deal with, it is incredibly difficult to conduct 100% quality control. There are errors in the data, and I am aware of a few that I have not corrected yet. Additionally, the PMF Program Office, in conjunction with the schools that feed it their nominees, tends to make what I would consider needless distinctions in the school names. For instance, in the lists on the PMF site, you may notice that Harvard has four or five distinct names: one for Harvard University, and the rest for things like the law school, the divinity school, and the like. I realize that students at these schools, and the schools themselves, often pride themselves on such distinctions, but I assure you it makes data analysis an even greater chore. Where possible, I have consolidated schools under the common university names. Besides, it would be utterly meaningless for me to depict semifinalists and finalists at that granularity, because all you would see is a set of concentric circles centered on the latitude and longitude of Harvard, for instance. In addition to name consolidation, I have also expanded each entry to the full text of the school name, which was a prerequisite to gathering the geolocation information. This will become apparent once I am satisfied with the interactive tools and release them.
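To make the consolidation step concrete, here is a minimal sketch of one way to do it in Python. It is an illustration only, not the actual cleanup code: the alias table, the exact name strings, and the helper names `normalize` and `consolidate` are hypothetical, and a real list would need many more entries.

```python
import re
from collections import Counter

# Hypothetical alias table: sub-school names (keys, lowercased) mapped to the
# common university name. The exact strings on the PMF lists may differ.
ALIASES = {
    "harvard university": "Harvard University",
    "harvard law school": "Harvard University",
    "harvard divinity school": "Harvard University",
}


def normalize(name):
    """Collapse runs of whitespace, trim the ends, and lowercase for lookup."""
    return re.sub(r"\s+", " ", name).strip().lower()


def consolidate(name):
    """Return the common university name, or the trimmed input if no alias is known."""
    return ALIASES.get(normalize(name), name.strip())


# Made-up input rows, for illustration only.
raw_names = ["Harvard University", "Harvard Law School", "  Harvard  Divinity School "]
counts = Counter(consolidate(n) for n in raw_names)
print(counts)
```

Counting after consolidation yields a single marker per university instead of a stack of concentric circles at the same coordinates. Names that are not in the table pass through unchanged, so gaps in the table are easy to spot.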

I am interested in what you think of what I’ve presented, both in my approach and in what the data has to say. Also, let me know what other kinds of views you are interested in. My tools are probably capable of generating pretty much anything, so just let me know.

Great visualizations, and thanks for sharing! You clearly had to go through a lot of data cleaning to get to a product that was useful like this.

I took the PMF test in grad school, and definitely wouldn’t have been represented in either of these maps!

I’d be interested to see how the representation of each state changed between the semifinal and final rounds. Like you said, it’s not surprising that the east coast is so heavily represented, but I wonder if representation from the west coast or interior states dropped disproportionately in the finalist round…

I actually realized that the finalist lists I have (2009, 2010, and 2011) include degree fields. I am in the process of consolidating school names right now for 2010 finalists and storing them in a database. Once I clean those up and attempt some year-on-year performance metrics, I hope to revisit the degree field question. Also, if anyone wants access to the data to do your own analysis (or just to join in this effort), I have a tool that is currently feeding out results in JSON format; other formats shouldn’t be hard. Let me know if you want access to it and I will make sure it’s robust enough.

It is absolutely great to see the data. I am looking forward to the finished maps. Thank you.

This is SPECTACULAR. Thank you so much for doing this!

One thing that bugs me, and has bugged a number of my students, is that the cut-off between nominees and semifinalists is so steep. At the graduate program where I work, we nominated over 40 candidates this year and only had 5 semifinalists. I started wondering whether certain questions in the online assessment are value-laden to discriminate against folks with a “West Coast” mentality in some way, because I know many East Coast schools have nowhere near the drop-off we experienced.

I also think that the online assessment is much more opaque and even a little suspect in its reliability and validity compared with the in-person assessment. I believe OPM has staff organizational psychologists devising this test, but there is absolutely no information about what they are looking for, and each year the psychological assessment in particular seems to be a totally bizarre set of questions. With over 2 million staff, how can there be only one “right” personality for a leader in the federal government?? And why are most of the people with the “right” personality from the East Coast?