Prior to joining the public sector, I worked in the private sector for eight years, often as the first user experience designer on a team, hired on to build out the team’s design practice to better serve our users’ needs. I’d meet a lot of people who were immensely interested in design thinking and bringing human-centered design practices to their teams. There’s the ideal process, or what you learn as the five steps to implement on projects, from empathizing to testing, and what actually happens in practice on a team. Anyone who has experience with change management can attest to this – it is important to adapt any methods to the culture and environment where you’re introducing these methods.

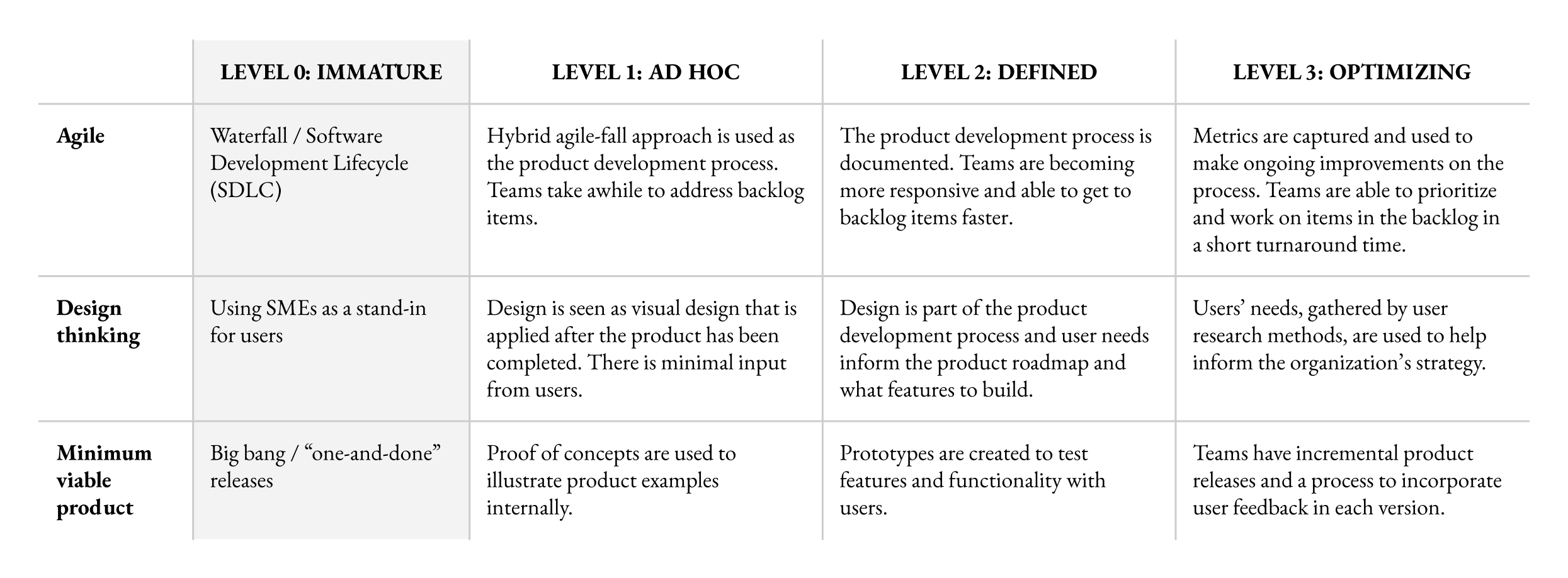

So what concepts and methods am I talking about? Within the realm of “digital transformation,” tech buzzwords litter the internet and our inboxes. Agile, big data, cloud computing, design thinking and minimum viable product (MVP) are a few phrases that stand out. Instead of dismissing these terms as something only the tech team needs to know, there is a lot of value in folks on the program side understanding them.

On one of my government projects, I was a bridge between the program and technical teams. We had a program analyst who was in charge of the “business” needs for their group and hadn’t ever worked with a technical team before, and a technical lead who was an expert in their system but unfamiliar with the program operations. After the three of us worked together closely, the program analyst saw how being “fluent” at a high level from a technical perspective helped support the services they provided to their customers.

In this first of a two-part series, I’ll start with a brief overview of three of these terms and some of the common pitfalls I’ve seen when implementing them in organizations. In my next post, I’ll share some of the best practices I’ve learned when introducing teams to these new concepts. The idea is that teams and organizations don’t just jump headfirst, but see themselves in a process where they mature their capabilities in these concepts over time.

1. Agile software development

Atlassian defines Agile as an iterative approach to project management and software development that helps teams deliver value to their customers faster and with fewer headaches. I qualify Agile with software development because the term has also been used to describe a mindset. To me, this is the difference between big “A” Agile and little “a” agile, the former being the software development methodology and the latter the mindset.

Agile is about delivering value to users in small increments so teams can quickly respond to changes. I’ve joined many teams that used Agile methodology, only to dig in further and find that they were checking off the practices but missing the purpose behind them. For example, teams would participate in daily stand-ups but weren’t actually communicating meaningful status updates. The misunderstanding I saw most often was teams that believed they were doing Agile but were actually doing Agile-fall, a combination of Agile and waterfall methods. This meant that teams were iterating in certain parts of the process and keeping the existing waterfall process for the rest, often getting the worst of both methods.

Download the Agile for Everyone Guide for in-depth agile techniques for teams.

2. Design thinking

Tim Brown, CEO of IDEO, defines design thinking as a human-centered approach to integrate the needs of people, the possibilities of technology and the requirements for business success. In introducing this process to teams, I’d often encounter the misconception that design was only visual or graphic design, or that it was only brought in after a solution had been created. Designers would then have to combat this notion and show the value in design thinking using methods like problem framing, co-designing with users, and prototyping and testing.

The design thinking process can also vary depending on the level at which it is being used. Leveraging the process at the feature level for a product is very different from leveraging it at the strategy level for a department or an entire organization. Additionally, teams that are able to incorporate a user research cadence may find synthesizing the results to be difficult. It’s easy to get overwhelmed with all of the information and insights you may have gathered and struggle to find trends or a direction forward. I’ve seen teams lose momentum after research, resulting in management shrugging off the practice entirely.

Read an introduction to design thinking for more information about this process.

3. Minimum viable product (MVP)

In determining what to launch, product development teams often scope features for a minimum viable product (or MVP). Eric Ries, author of The Lean Startup, defines MVP as a version of a usable product that allows teams to collect insights from customers to help refine the product over time. I’ve had a lot of program managers and teams in government react negatively to hearing the term “MVP” thrown around. Honestly, there is some validity in that feeling. The way IT projects are structured often leaves teams with a “one-and-done” mentality. There may not be subsequent product launches, so the thinking is that every feature needs to be included in the first launch. Teams are afraid that they won’t be able to build on one release after another. There are a lot of changes that need to happen to help government adopt this method, but that’s a whole other article for another day.

I also see a lot of product teams struggle to define the “right” MVP. Teams will focus on developing and launching one function of a feature rather than an end-to-end feature that allows their users to accomplish one of their goals. For example, a team I joined was focused on fleshing out every use case for a login flow. After a story-mapping exercise, the team was able to identify and prioritize a user goal of applying for and receiving grant funds, and refocused their energy on building out the minimum features needed to support and launch this functionality.

What’s next?

Instead of going all in, start small first. This back-of-the-napkin diagram I did shows a high-level maturity model for these three concepts to help you understand where you might be and the steps to get to the next level:

The best way to learn these concepts is to do them. Like I mentioned, the actual implementation is very different from the textbook process. By being on a project that uses these methods, you pick up a lot of on-the-ground experience steeped in realism, and you learn to pivot and make changes as you go along.

Stay tuned next week for “Part 2: Implementing Tech Best Practices for Government Teams.” In the meantime, check out the Digital Services Playbook for more tech best practices.

Interested in becoming a Featured Contributor? Email topics you’re interested in covering for GovLoop to featuredcontributors@govloop.com. And to read more from our Winter 2021 Cohort, here is a full list of every Featured Contributor during this cohort.

Jenn Noinaj is a social impact strategist, researcher, and designer passionate about using design to solve society’s most pressing challenges. She’s currently leading the Public Interest Technology Field Building portfolio at the Beeck Center for Social Impact + Innovation where she works on creating solutions to make the public interest technology field more inclusive. Prior to this role, she worked in the federal government at the US Digital Service where she partnered with various agencies to transform digital services across government, building capacity in technology and design and championing a user-centric culture. You can find more about her on her website and can follow her on LinkedIn and Twitter.
