Q1. WHAT IS MEANT BY THE TERM “TECHNOLOGY GOVERNANCE”?

A1. Note that the term GOVERNANCE includes:

- RESPONSIBILITY – being held accountable for a specific project, task, activity or decision;

- EXERCISING AUTHORITY – the power to influence behavior;

- COMMUNICATION – clarifying and exchanging information;

- EMPOWERING – giving official authority to act.

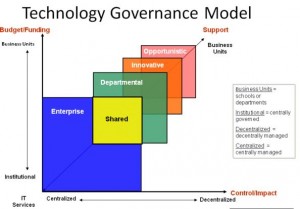

Governance also involves establishing measurement and control mechanisms through specific techniques and automated tools that enable staff to carry out their roles and responsibilities. Using this definition as a guideline, TECHNOLOGY GOVERNANCE is a compilation of the formalized governing practices (demarcated responsibilities of decision makers utilizing a federal agency’s defined enterprise architecture framework) that link and align the agency’s strategic plan (vision, mission, goals, objectives, strategies and performance indicators) with oversight of its technological capability and interoperability across the planning, utilization, maintenance and disposal of agency technology.

Q2. WHY IS THE TERM TECHNOLOGY AND NOT INFORMATION TECHNOLOGY BEING USED?

A2. Information technology was broadly defined as the integration of a computer with telecommunication equipment for storing, retrieving and manipulating data. However, the advent of the Internet of Things (IoT) requires agencies to broaden their management horizon and learn new techniques to manage these “new” technological assets. IoT, broadly defined, is the network of physical objects or “things” embedded with electronics, software, sensors, and network connectivity, which enables these objects to collect and exchange data. An example of this broader view is the work of Ms. Laverne Council, Assistant Secretary for Information and Technology and Chief Information Officer at the U.S. Department of Veterans Affairs (VA). Ms. Council has established a new vision for the future of technology that improves the experience of veterans by utilizing emerging technologies like IoT. https://veterans.house.gov/witness-testimony/the-honorable-laverne-council

Q3. WHAT ARE THE TELL-TALE SIGNS OF WEAK OR NON-EXISTENT TECHNOLOGY GOVERNANCE IN AN ORGANIZATION?

A3. For effective technology governance to exist, the federal agency must undertake ENTERPRISE PLANNING CONGRUENCE, i.e. a clear and simple understanding of how technology specifically supports the published goals, objectives and initiatives, clearly tying them together. Many times only a very casual relationship exists, or none exists at all. When the relationship is only casual, technology initiatives sprout that have no bearing on the agency’s strategic goals, objectives, strategies, or initiatives. In such instances three participants – 1) agency enterprise-wide, 2) agency program, and 3) agency technology management – begin to wonder why a specific technology venture is even being deployed. Several questions whose responses establish clear and specific relationships include:

- Why is this technology project or initiative being undertaken?

- How does this technology project or initiative fit into the Agency’s published Enterprise Architecture?

- How does this technology project specifically support the agency’s:

  - Strategic Plan?

    - e.g. Cross-Agency Priority Goals

    - e.g. Agency Priority Goals

  - Human Capital Strategic Plan?

  - Technology Plan?

  - Financial Planning results within the Annual Financial Report (AFR) and Performance and Accountability Report (PAR)?

  - Acquisition Plan?

  - Security Plan?

  - Performance Management (e.g. the Agency’s Stat System – a performance management technique that includes the regular review of operational data; discussions on whether programs, services, and strategies are performing as expected; and rapid decisions to correct problems)?

ENTERPRISE PLANNING CONGRUENCE would be performed as part of the Capital Planning and Investment Control (CPIC) process, which includes assessing the congruency of proposed technology projects or initiatives with the planning components listed above.
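As a rough illustration of what such a congruence check might look like in practice, the following minimal Python sketch records which planning components a proposed project claims to support and reports the ones with no documented link. The component keys, the `TechnologyProject` class, the `congruence_gaps` function and the sample project are all hypothetical names invented for this sketch; they are not part of CPIC or of any agency system.

```python
from dataclasses import dataclass, field

# Planning components taken from the checklist above; the short keys are
# hypothetical labels chosen for this sketch.
PLANNING_COMPONENTS = (
    "strategic_plan",
    "human_capital_strategic_plan",
    "technology_plan",
    "financial_planning",
    "acquisition_plan",
    "security_plan",
    "performance_management",
)


@dataclass
class TechnologyProject:
    name: str
    # Maps a planning component to the goal or objective the project supports.
    supports: dict = field(default_factory=dict)


def congruence_gaps(project: TechnologyProject) -> list:
    """Return the planning components the project has no documented link to."""
    return [
        component
        for component in PLANNING_COMPONENTS
        if not project.supports.get(component, "").strip()
    ]


# Made-up example project, for illustration only.
project = TechnologyProject(
    name="Sensor network pilot",
    supports={
        "strategic_plan": "Agency Priority Goal (placeholder)",
        "security_plan": "Access control review (placeholder)",
    },
)
print(congruence_gaps(project))
```

A project that returns a long list of gaps is a candidate for the question raised above: why is this technology initiative being undertaken at all?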

Q4. WHAT DOES “CAPABILITY” HAVE TO DO WITH TECHNOLOGY GOVERNANCE?

A4. For context, continuous process improvement is based on many small evolutionary steps rather than larger revolutionary innovations. “Best-In-Class” technology organizations use a methodology called the Capability Maturity Model (CMM) http://www.sei.cmu.edu/reports/93tr024.pdf to develop and refine their software development processes. This model describes a five-level evolutionary path of increasingly organized and systematically more mature processes, organizing the evolutionary steps into five maturity levels that lay successive foundations for continuous process improvement. By utilizing methodologies like CMM, federal agencies may be able to assess their “Governance Capability”, that is, to evaluate their respective Governance, Risk Management and Internal Control processes based on concepts such as:

- (1) System-based evaluations of existing internal controls against recognized control frameworks, which can be applied to set up Process Reference Models; and

- (2) Reference Model reviews that provide transparent methods for assessing the performance of relevant internal control processes, and tools for assessing control risk areas based on the gaps between target and assessed capability profiles.
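The idea of comparing target and assessed capability profiles can be sketched in a few lines of Python. The level names follow the five CMM maturity levels; the process areas, the scores, the `capability_gaps` function and the choice to treat an unassessed area as level 1 are assumptions made only for this illustration.

```python
# The five CMM maturity levels, for reference when reading the scores.
CMM_LEVELS = {1: "Initial", 2: "Repeatable", 3: "Defined", 4: "Managed", 5: "Optimizing"}


def capability_gaps(target: dict, assessed: dict) -> dict:
    """Return, per process area, how many maturity levels the assessed
    profile falls short of the target profile (largest gap first)."""
    gaps = {}
    for area, target_level in target.items():
        # Assumption for this sketch: an area that was never assessed is level 1.
        assessed_level = assessed.get(area, 1)
        if assessed_level < target_level:
            gaps[area] = target_level - assessed_level
    return dict(sorted(gaps.items(), key=lambda item: item[1], reverse=True))


# Made-up profiles, not real assessment results.
target_profile = {"governance": 4, "risk_management": 3, "internal_control": 3}
assessed_profile = {"governance": 2, "risk_management": 3, "internal_control": 2}

for area, gap in capability_gaps(target_profile, assessed_profile).items():
    print(f"{area}: {gap} level(s) below target")
```

The areas with the largest gaps are the control risk areas that would most plausibly be addressed first.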

Technology Governance involves an internal examination of a federal agency’s capabilities to:

- Execute a Technology Governance Assessment – a1. Execute an assessment to identify gaps; a2. Delineate the new role of technology in the agency, particularly in regard to IoT and social media management; a3. Define an evolution roadmap to address the gaps.

- Establish a Technology Governance Framework – b1. Define intra-agency roles and responsibilities; b2. Set up communication paths to support agency program technological alignment; and b3. Define management structures for decision making, reporting and escalation.

- Develop and Design Technology Governance Processes – c1. Develop and design agency technology governing policies; c2. Develop and design agency technology governing processes; c3. Develop and design agency technology Key Performance Indicators (KPIs) and reporting requirements.

- Implement Technology Governance Supporting Techniques and Tools – d1. Select techniques to be utilized in the technology initiative assessment and evaluation processes; d2. Implement automated tools that utilize the selected techniques for traceability to support the execution of the technological solution; d3. Implement automated tools utilizing the selected techniques for data collection and agency management reporting.

- Design, Establish and Implement a Continuous Improvement Plan to Control the Lifecycle – e1. Identify, select, implement and monitor the lifecycle processes utilized by the agency for making technology investment decisions, assessing technology investment process effectiveness, and refining technology investment policies, procedures and reporting; e2. Identify milestones and indicators to monitor the execution of strategies; e3. Define, develop and implement a Steering Committee to manage the relationships between and within the program, finance, acquisition, human capital, facilities, cyber-security and technology functions within the agency related to technology initiatives.

Q5. WHY IS ENTERPRISE ARCHITECTURE THE MOST EFFECTIVE STARTING POINT FOR IMPROVING THE CONGRUENCY OF TECHNOLOGY GOVERNANCE?

A5. In spite of the Federal Government’s efforts to identify and adopt best practices in the development of technology projects and initiatives, there is still a high rate of failure and missed objectives. Many technology projects do not meet the agency’s goals, objectives and strategies. The ultimate solution will be the integration of disparate technology planning methodologies and initiatives. However, the most effective starting point would be the linkage and alignment of Agency Architectures by answering the following questions:

5.1 – STRATEGIC ARCHITECTURE – IS THE AGENCY DOING THE RIGHT THINGS?

Q5.1.1 Does the agency’s strategic architecture address the critical external environmental factors on which the initial strategies were based?

Q5.1.2 Is there a framework in the agency that links strategy to function, organization, process, activity and task to technology to determine whether they are aligned?

Q5.1.3 Who manages this framework and who keeps it current?

5.2 – TECHNOLOGY GOVERNANCE – IS THE AGENCY GETTING ITS EXPECTED BENEFITS?

Q5.2.1 Are the process and activity measurements (inputs, outputs and outcomes) communicated in a meaningful way that encourages management action?

Q5.2.2 Are Key Performance Indicators (KPIs) identified and managed across the agency?

Q5.2.3 Are targeted results communicated on a frequent basis?

5.3 – BUSINESS ARCHITECTURE – ARE WE DOING THINGS THE BEST WAY?

Q5.3.1 Are the agency’s business processes defined and modeled (Entity Relationship Diagram, Data-Flow Diagram, Data Dictionary)?

Q5.3.2 Are the processes engineered to eliminate non-value added tasks and activities carried out by employees, consultants, and suppliers to reduce controllable Cost Drivers?

Q5.3.3 Is the agency’s purpose in applying technology to automate current techniques in order to improve process and activity performance?

5.4 – PERFORMANCE ARCHITECTURE – DOES THE AGENCY MANAGE BY INTUITION AND HISTORY OR DOES IT MANAGE BY FACTS AND DATA?

Q5.4.1 How does the agency define “successful performance”?

Q5.4.2 Does everyone in the agency have the same definition as to what constitutes “successful performance”?

Q5.4.3 How does the agency strive to exceed performance targets?

Q5.4.4 Has the agency identified the key performance indicators for the strategies and business processes and activities?

Q5.4.5 How does the agency measure, capture and re-utilize the results of processes and activities?

Q5.4.6 Is there both transparency and traceability in the measurement process?

5.5 – REFERENCE MODELS – IS THE AGENCY CONSTANTLY UPDATING AND UTILIZING THE FEDERAL ENTERPRISE ARCHITECTURE (FEA) REFERENCE MODELS WHEN PLANNING, DESIGNING, MAINTAINING AND REPLACING ITS TECHNOLOGY?

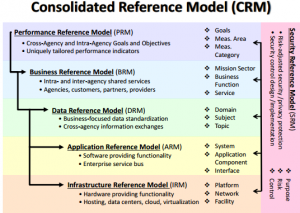

Federal agencies typically have a Chief Acquisition Officer, Chief Financial Officer, Chief Information Officer, and Performance Improvement Officer, or employees with responsibilities for these roles. An agency’s “Consolidated Reference Model” can therefore be used to link and align acquisition, financial, information systems, performance and security initiatives, each of which would fit neatly within one of the respective Reference Models. The questions to be asked are:

Q5.5.1 Does the agency have a published Performance Reference Model Taxonomy with definitions?

Q5.5.2 Does the agency have a published Business Reference Model Taxonomy with definitions?

Q5.5.3 Does the agency have a published Data Reference Model Taxonomy with definitions?

Q5.5.4 Does the agency have a published Application Reference Model Taxonomy with definitions?

Q5.5.5 Does the agency have a published Infrastructure Reference Model Taxonomy with definitions?

Q5.5.6 Does the agency have a published Security Reference Model Taxonomy with definitions?

With the advent of the Digital Accountability and Transparency Act of 2014 (DATA Act), effective Technology Governance is required to complete these Reference Models, ensuring that current databases are normalized and that future acquired or leased technology has its data tables structured so that they can be easily linked with data tables in other applications. This requires data governance as a control to ensure that data entered by an agency employee, shared services provider, commercial service provider, or an internal or external automated process meets agency standards, such as business rules, data definitions and the data integrity constraints in the data model. This will take time to develop and implement, but it will prove to be a valuable long-term effort.
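To make the data governance control more concrete, here is a minimal Python sketch of a check applied to an incoming record, whatever its source. It validates the record against data definitions (field presence and type) and then against business rules. The `FieldDefinition` class, the `validate_record` function, the field names and the sample rule are hypothetical and exist only for illustration; they are not taken from the DATA Act or from any agency data model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldDefinition:
    """A simplified data definition: field name, expected type, required flag."""
    name: str
    data_type: type
    required: bool = True


def validate_record(record: dict, definitions: list, business_rules: list) -> list:
    """Return a list of human-readable violations; an empty list means the
    record meets the (hypothetical) agency standards."""
    errors = []
    for definition in definitions:
        value = record.get(definition.name)
        if value is None:
            if definition.required:
                errors.append(f"{definition.name}: missing required value")
            continue
        if not isinstance(value, definition.data_type):
            errors.append(f"{definition.name}: expected {definition.data_type.__name__}")
    # Business rules are only evaluated once the record is structurally valid.
    if not errors:
        for description, rule in business_rules:
            if not rule(record):
                errors.append(f"business rule failed: {description}")
    return errors


# Hypothetical definitions and rule, for illustration only.
definitions = [
    FieldDefinition("award_id", str),
    FieldDefinition("obligation_amount", float),
]
business_rules = [
    ("obligation amount must not be negative", lambda r: r["obligation_amount"] >= 0),
]

print(validate_record({"award_id": "A-001", "obligation_amount": 125.0}, definitions, business_rules))
print(validate_record({"award_id": "A-002", "obligation_amount": -5.0}, definitions, business_rules))
print(validate_record({"obligation_amount": "ten"}, definitions, business_rules))
```

Running the same check at every entry point, whether manual or automated, is one way the standards described above could be enforced consistently.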
