Think about the critical applications that support the work you do every day. What data from those systems do you rely on to make timely decisions? When you think about data availability in these terms, it becomes a top-of-mind issue for all employees.

The bottom line: Data availability is vital for us all, whether we realize it or not. It is critical to ensure that applications, whether virtual, on-premises or in the cloud, are always available and meet the service level agreements (SLAs) that your 24/7 operations demand.

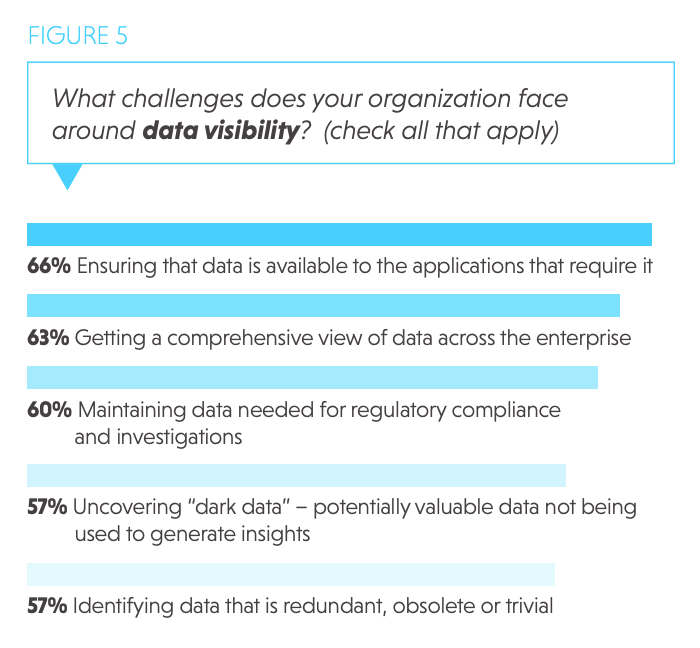

Our survey data confirms that data visibility is a challenge on all fronts. That makes it equally tough to maintain compliance, identify redundant and dark data, and ensure data is available across applications (see Figure 5).

Respondents also shared in their own words what visibility challenges they face:

- “Sharing databases between departments across state government.”

- “Collaborating on access, custody, distribution, archival, and reporting on data within various user groups.”

- “Getting management to enforce staff [to] stop storing [data] in thousands of Microsoft Excel spreadsheets on their own without communication. Makes compiling for any global requests almost impossible.”

A 2018 Defense Department (DoD) Inspector General (IG) report illustrates how closely intertwined these data visibility challenges really are. The report cited a July 2018 memorandum from the department's chief information officer to DoD officials. It stated that, at the time, DoD had not reported more than 30% of its software inventory.

By law, all agencies are supposed to track their software inventories. According to the IG report, DoD and its components had not completed that inventory, so they lacked visibility over their assets. As a result, they also could not determine the extent of existing vulnerabilities that could affect operations if information processed, stored or transmitted by software applications were compromised.

Data visibility is further complicated when agencies continue to amass redundant, obsolete and trivial (ROT) data. ROT data stored in government systems keeps costing money and obscures more relevant data.

“We see redundancy in data sets in pretty much every environment that you come across, especially with unstructured content,” said Mike Malaret, Director of Sales Engineering for the U.S. Department of Defense and Intelligence Communities at Veritas. “It’s important to be able to have insights into the utilization of that data. There is great benefit in seeing where data exists, where the duplicate data is located and what orphan data is in your environment.”

Orphan data is data without a clear owner. It can account for large volumes of data that have grown exponentially over time. If agencies can't account for this data, they can't begin to assess whether it is necessary or what value it actually serves. Having tools that can identify that data is critically important, Malaret said.

Armed with this information, agencies can prioritize the data most critical to their mission requirements and begin to rid their systems of ROT data. Doing so frees up resources and reduces the time it takes to analyze data.

This article is an excerpt from GovLoop's recent report, "A New Approach to Managing Data Complexity."
