“The great truism that underlies the civic technology movement of the last several years is that governments face difficulty implementing technology, and they generally manage IT assets and projects very poorly.” – Mark Headd

In 1662, the Licensing of the Press Act in England required that printing presses should not be set up without notice to the Stationers’ Company. A king’s messenger had the power to enter and search for unlicensed presses and printing.

Satire from ‘Grub Street Journal’, London, 1732

Each era and each government has found its own way to integrate emerging technologies into society, a challenge amplified by the complexity and bureaucratic hurdles that often characterize government structures. The need for regulatory oversight cannot be overstated: it helps strike a balance between innovation and public welfare.

Today, that technology is artificial intelligence (AI), which promises to transform governance, from improving public services to enhancing decision-making and policy formulation. It also raises significant questions about ethics, privacy, and accountability, all of which demand careful consideration and strategic planning.

In this blog post, we take a stab at decoding the 2023 Government AI Readiness Index and use it to explore the nuances of AI adoption in North America and Western Europe. We also examine the opportunities and challenges nations face in adopting AI, and the role international collaboration can play along the way.

Overview of the 2023 Government AI Readiness Index

The 2023 Government AI Readiness Index, produced by Oxford Insights, was published on December 6, 2023. It evaluates and ranks how ready governments worldwide are to implement and integrate AI in public services. The 2023 edition expands its scope to 193 countries, up from 183 in the previous year.

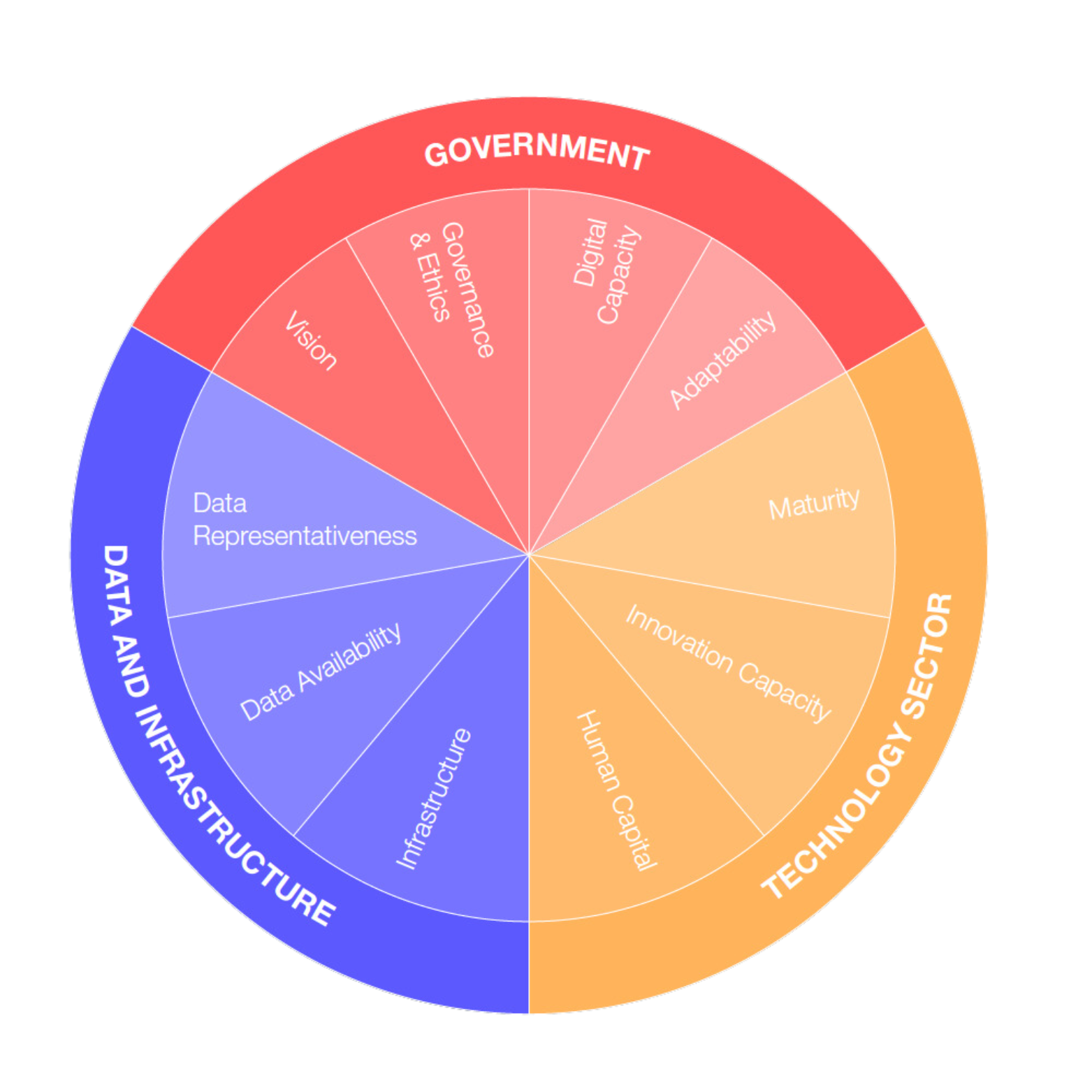

The report is structured to provide a detailed analysis across three main pillars: Government, Technology Sector, and Data & Infrastructure. Each of these pillars is assessed based on various indicators to determine a country’s overall AI readiness. The report delves into global findings, regional reports, and key developments in AI governance, offering insights into trends, strategies, and policies adopted by different countries and regions.

Additionally, the report covers the broader context of AI in governance, including ethical considerations, international collaboration, and challenges faced by different income groups and regions in adopting AI technologies. It aims to provide a comprehensive understanding of the current global AI landscape in governance, highlighting disparities, advancements, and potential areas for development in different parts of the world.

Comparing Models and Approaches in AI Adoption: North America vs. Western Europe

The 2023 Government AI Readiness Index covers 193 countries, including 35 in North America and Western Europe. These countries are among the most advanced in AI adoption, with the United States, Canada, Germany, and France ranking in the top 10 globally. Shaped by their histories of economic competition and by their distinct social and political contexts, the two regions have adopted different models of AI adoption.

North America: Technological Innovation and Private-Sector Leadership

In North America, particularly the United States, there is a strong emphasis on technological innovation and private-sector leadership. The U.S. government's approach focuses on fostering a robust AI industry, promoting research and development, and encouraging public-private partnerships. This is reflected in the U.S. lead on the Index's Technology Sector pillar. President Biden's October 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence takes a comprehensive approach to integrating AI across federal agencies, emphasizing standards for AI use and the appointment of AI governance bodies within agencies.

Canada, while sharing similarities with the U.S. in its approach to AI, also places a strong emphasis on ethical considerations and public welfare. Canada’s AI strategy includes significant investments in AI research and development but with a clear mandate for responsible and ethical AI development.

Canada’s AI strategy showing Commercialization, Standards, and Talent and Research as core pillars

Western Europe: Regulatory and Collaborative Approach

In contrast, Western Europe, particularly the European Union, takes a more regulatory and collaborative approach. The region's strategy is heavily influenced by the EU's AI Act, which aims to set common standards for AI use across member states and to ensure that AI development aligns with ethical and human rights principles. The European approach is more uniform across countries, with a strong emphasis on regulation, ethics, and public-sector applications of AI. Western European countries also perform strongly on governance, reflecting their strategic and regulatory approach to AI.

In an illustrative case of Western Europe's cautious approach to AI regulation, Italy's data protection authority temporarily banned ChatGPT over privacy concerns at the end of March 2023, making Italy the first Western country to act against the platform. The authority questioned ChatGPT's data collection and storage practices, in particular the legal basis for using personal data to train its algorithms. It also raised concerns about how the platform handled inaccurate information and about protecting younger users from inappropriate content. Italy's move reflects a broader trend in Western Europe of carefully weighing technological innovation against ethical and privacy standards.

Key Takeaways

The differences in these approaches are likely to influence how successfully each region integrates AI into governance. North America's model, driven by technological innovation and private-sector dynamism, may produce rapid advances in AI applications but could struggle to ensure equitable access and address ethical concerns. Western Europe's regulatory and collaborative approach, on the other hand, may produce more standardized and ethically aligned AI applications but could slow the pace of AI innovation and adoption.

The Role of International Collaboration and Looking East

International collaboration is crucial in AI development, especially for nations seeking to bridge their AI readiness gap. Collaborating with countries that have advanced further in AI adoption provides a unique opportunity to learn from their experiences, adopt best practices, and leverage their technological advancements while being mindful of local context and priorities.

For example, Asian countries like India, China, South Korea, and Indonesia offer valuable insights. These countries have made significant strides in AI, each with distinct models and approaches that could inform different governments’ AI strategies.

India, for instance, has focused on developing AI for social good, aiming to apply AI solutions to address challenges in healthcare, agriculture, and education. This focus on socially relevant AI applications could offer practical models for other countries where similar social challenges exist.

China’s approach has been more state-driven, with substantial government investment in AI research and development. This has led to rapid advancements in AI technology, although it also raises questions about surveillance and ethical concerns. Different countries can learn from China’s technological advancements while being mindful of these ethical considerations.

South Korea and Indonesia have also made notable AI progress. South Korea’s strong focus on research and innovation, coupled with its robust technological infrastructure, offers lessons in building a conducive environment for AI growth. Indonesia’s approach, balancing AI development with regulatory frameworks, could be particularly relevant for nations considering similar regulatory needs.

Engaging with these Asian countries could provide other nations with technical expertise, training opportunities, and potential partnerships. Such international collaboration, coupled with learning from diverse AI adoption models, can help countries develop AI strategies that fit their own needs and contexts.

Conclusion

As governments navigate the complex landscape of AI adoption, it is crucial to recognize their agency in shaping their own AI futures. The journey towards AI readiness and effective implementation is not merely about adopting technology; it requires strategic alignment with each nation's unique context and development goals.

A proactive approach is essential. Rather than passively adopting technologies developed elsewhere, countries have the opportunity to innovate AI solutions that address their specific challenges and priorities. By developing national AI strategies that reflect local needs, countries can ensure that AI technology serves their citizens effectively and ethically.

Ultimately, each nation's AI journey is one of balance: balancing innovation with ethical considerations, local needs with global trends, and independent development with international collaboration. By striking this balance, nations can harness the transformative power of AI to drive progress and prosperity in the digital age.

Beyond Tech and Data: Incorporating Environmental and Social Indicators in AI Readiness Assessments

The 2023 Government AI Readiness Index, while comprehensive in certain aspects, overlooks indicators such as:

- AI Power Usage: The power consumption of AI systems directly contributes to an entity’s carbon footprint. With AI becoming more complex and data-intensive, its energy demands are rising, making it essential to consider energy efficiency and renewable energy sources in AI deployments. This indicator would reflect the environmental sustainability of AI initiatives.

- Climate Impact: In its applications, AI can contribute to climate change mitigation and adaptation. For instance, AI can be used to improve the efficiency of energy systems, optimize transportation, and enhance climate modeling. This indicator would reflect the extent to which AI initiatives contribute to climate change mitigation and adaptation.

- Inclusivity in Board Representation: This indicator is key in assessing how diverse and inclusive AI governance structures are within government entities. It measures the extent to which decision-making bodies reflect diverse perspectives, including gender, ethnicity, and professional backgrounds. This is vital for ensuring that AI policies and strategies are equitable and considerate of all segments of society.

- Alignment with Sustainable Development Goals (SDGs): This indicator would evaluate how government AI initiatives align with the United Nations' SDGs, helping ensure that AI developments contribute to global sustainability objectives and address key societal challenges.

Inclusion of these metrics could offer insights into how AI initiatives align with broader sustainability objectives, ensuring that technological advancements do not come at the expense of environmental and social responsibilities.

The Licensing of the Press Act 1662: What happened next?

“An Act for preventing the frequent Abuses in printing seditious treasonable and unlicensed Books and Pamphlets and for regulating of Printing and Printing Presses.” — the long title of the Licensing of the Press Act of 1662

The Act lapsed in 1695 and was formally repealed by the Statute Law Revision Act 1863, which repealed a large set of superseded acts.

The Licensing of the Press Act 1662 lapsed for several reasons, with lasting consequences:

- Monopoly of the Stationers’ Company: The Act had given the Stationers’ Company a monopoly, which led to an increase in the prices of books and a decrease in their quality.

- Violation of Property Rights: The Act allowed authorities to search all houses on the suspicion of having unlicensed books, which was seen as a violation of property rights.

- Change in Administration: Licensers changed as different political parties came to power, which led to instability.

- Growth of the Press: After the Act lapsed, the London and provincial newspaper press established itself within a decade.

Afterwards, restrictions on the press took the form of prosecutions for libel. The end of licensing marked a significant step towards press freedom.

David Lemayian is a software developer, technology advisor, and mission-driven entrepreneur with experience building teams, tools, and platforms. He helped kickstart Code for Africa (CfA), the continent’s largest network of open data and civic technology labs, and until recently served as its Chief Technologist / CTO and Executive Director. David continues to use his experience in web technologies, mobile applications, artificial intelligence, digital security, and Internet of Things (IoT) to support organizations, non-profits, and governments to execute on their technology and data strategies.
